MoveMusic VR

VR app that lets people make music with body movement.

- VR

- UE4

- C++

- UI/UX

MoveMusic is my initial attempt to create a tool for digital music creation & performance that allows for interaction using body movement. Since 2016, MoveMusic has been my largest passion project. It has taken the form of multiple prototypes over the years, and was previously known as MoveMIDI.

Live musical performance using MoveMusic VR.

In its current form, MoveMusic is a VR MIDI controller app for Meta Quest VR headsets that lets users wirelessly control music software running on an external computer by interacting with a virtual 3D interface. It includes a 3D interface building system to allow users to create custom MIDI controller layouts. I intend to sell the MoveMusic VR app to consumers on the Meta Quest store in 2023.

MoveMusic requires 2 main apps I created:

- MoveMusic VR App: Runs on the VR headset and allows the user to interact with and build custom virtual 3D interfaces. Created using Unreal Engine and C++.

- MMConnect: Desktop app that bridges the Wi-Fi connection between the standalone VR system and a computer (macOS/Windows/Linux). Handles sending MIDI data to music software. Created using JUCE and C++.

I developed MoveMusic VR over a period of about 2 years, starting in August 2020 and submitting to the Meta Quest store in November 2022, while working a part-time job.

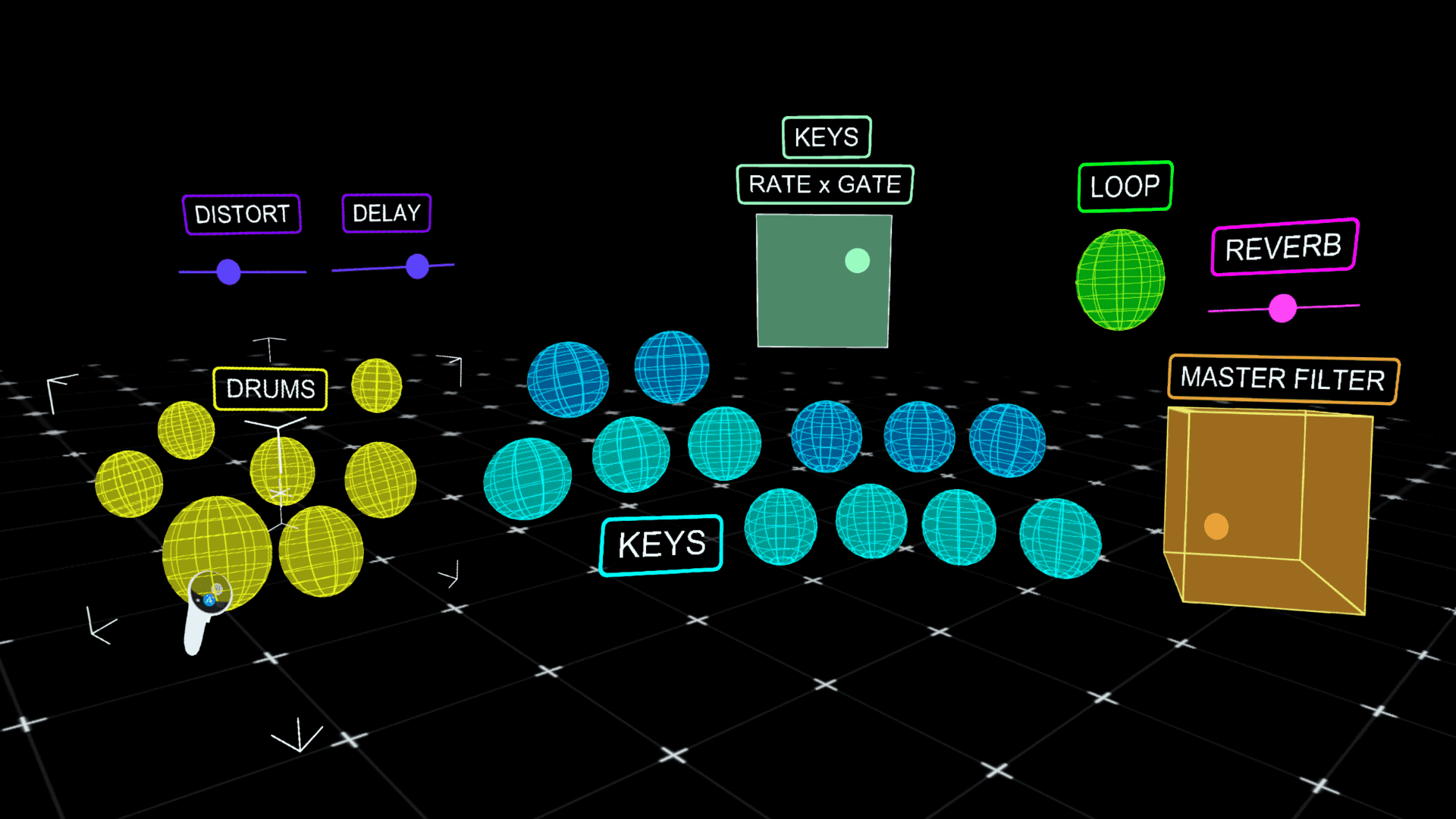

Example of a MoveMusic layout in VR.

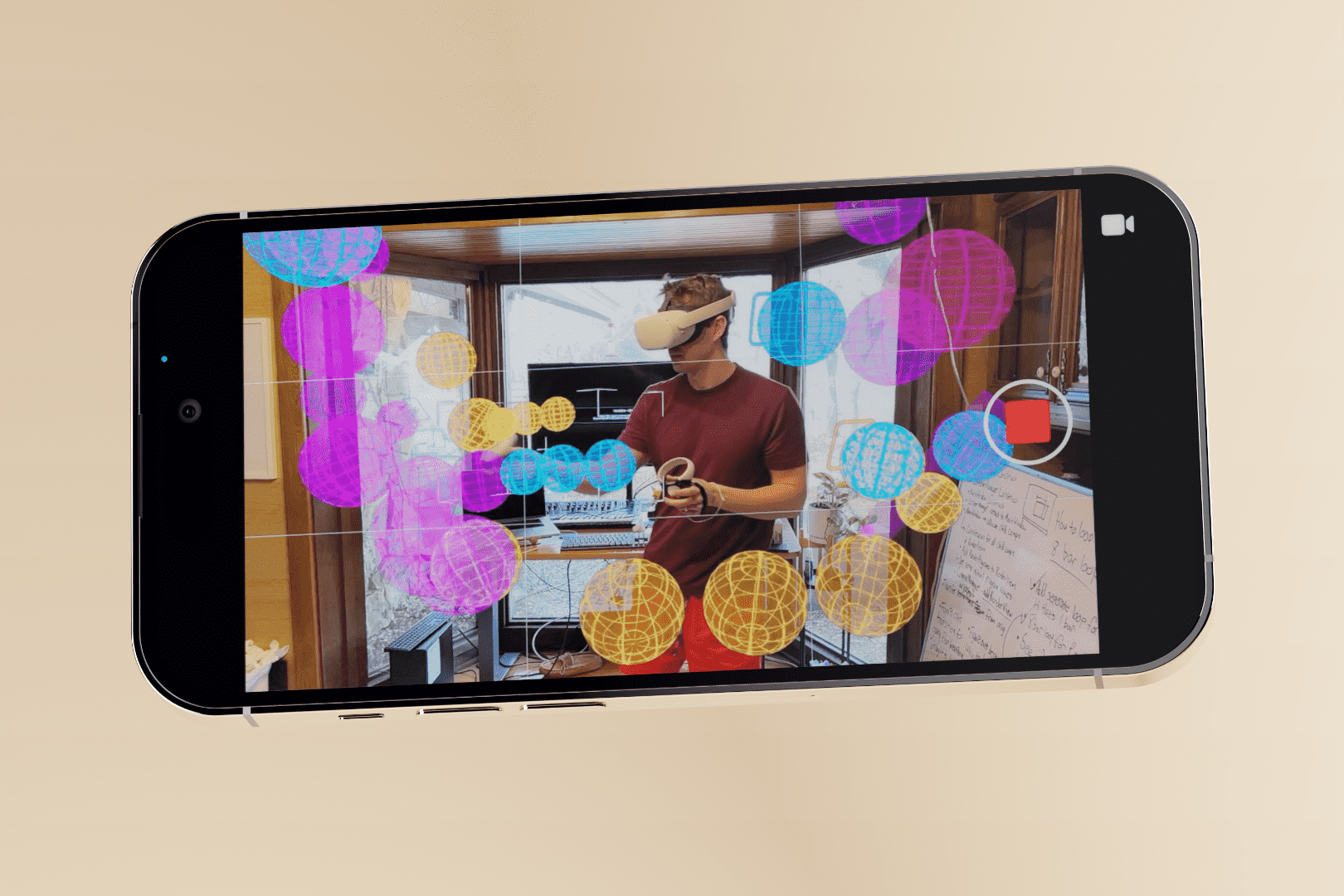

Passthrough Mixed-Reality view of MoveMusic VR.

Technical Accomplishments

Low-Latency Wireless MIDI Control

I originally did not expect it to be possible to send MIDI data with very low latency from a wireless, inside-out tracked VR headset. In my prior experience with Bluetooth MIDI controllers, I had observed considerable latency compared to a wired connection. This is why I chose Wi-Fi instead of Bluetooth as the communication method between the VR headset and computer, and I believe it to be a primary contributor to MoveMusic's low latency. Another significant contributor was using a direct Wi-Fi connection between the VR headset and computer.

Direct Wi-Fi Connection

To eliminate latency caused by a Wi-Fi router, I wanted to give users the option to directly connect their VR headset and computer via a Wi-Fi hotspot created by their computer. This feature significantly reduces latency, eliminates the need for users to have external network hardware (e.g. Wi-Fi router or dongle), and allows MoveMusic to be used on-the-go with just a VR headset and laptop. I added a button in MMConnect to toggle broadcasting a Wi-Fi hotspot since most computers are capable of this functionality. This was easy to implement on Windows by taking advantage of Windows' Mobile Hotspot feature. It was more difficult to implement on macOS, but I settled on an AppleScript solution to toggle Internet Sharing settings. I was able to localize the AppleScript solution to work for all supported macOS languages.

iOS App to Record AR Video

To film marketing videos for the MoveMusic VR app, I created an iOS app to help our team record high-quality AR-style footage of someone using MoveMusic alongside the VR visuals they are interacting with. The app shows a real-time, composited AR visual in the camera viewfinder. It can record 1440p footage at 60 FPS to two separate video files, one for the iOS camera and one for the VR camera, so they can be composited together at a higher quality in post-production. The app uses Meta's Oculus MRC system built into their headsets and is based on work by Fabio Dela Antonio in his RealityMixer app.

MoveMusic's Controller Building System

Interface Objects

My goal was to make MoveMusic's controller building system simple, yet open-ended and highly customizable for the user. Instead of providing the user with a set of fixed control modules, such as a piano keyboard or a drum pad array, I opted to provide simple interface objects which could be used as basic building blocks to create more complex, custom controller layouts.

At the time of launch, MoveMusic provides two main types of interface objects from which controller layouts can be built:

- Hit Zones can be hit to send MIDI Notes or CCs. They act like buttons or on/off triggers.

- Morph Zones are spaces you can move your controller through to send MIDI CCs. They act as range-based controls that can take the form of sliders, XY pads, or 3D XYZ cubes. You hold down the trigger button on your controller to use a Morph Zone.

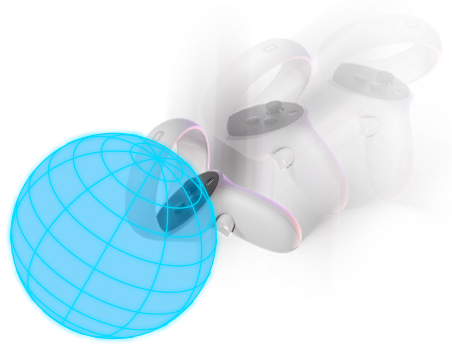

Hit Zone

Morph Zone
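
The two zone behaviors described above can be sketched in a few lines of C++. This is a minimal illustration, not MoveMusic's actual code: the `Vec3`, `Box`, and `HitZone` types, the function names, and the use of axis-aligned boxes for zone volumes are all assumptions made for the example. It shows a Morph Zone mapping a hand position to one 7-bit CC value per axis while the trigger is held, and a Hit Zone firing once when the hand enters it.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>
#include <cstdint>

// Hypothetical types for illustration; not MoveMusic's actual classes.
struct Vec3 { float x, y, z; };

// Axis-aligned box standing in for a zone's volume (an assumption).
struct Box {
    Vec3 min, max;
    bool contains(const Vec3& p) const {
        return p.x >= min.x && p.x <= max.x &&
               p.y >= min.y && p.y <= max.y &&
               p.z >= min.z && p.z <= max.z;
    }
};

// Map a coordinate within [lo, hi] onto the 7-bit MIDI CC range 0..127.
inline std::uint8_t toCcValue(float v, float lo, float hi) {
    assert(hi > lo);
    const float t = std::clamp((v - lo) / (hi - lo), 0.0f, 1.0f);
    return static_cast<std::uint8_t>(std::lround(t * 127.0f));
}

// Morph Zone: one CC value per axis, produced only while the trigger
// is held and the hand is inside the zone.
inline bool morphZoneValues(const Box& zone, const Vec3& hand, bool triggerHeld,
                            std::array<std::uint8_t, 3>& out) {
    if (!triggerHeld || !zone.contains(hand)) return false;
    out = { toCcValue(hand.x, zone.min.x, zone.max.x),
            toCcValue(hand.y, zone.min.y, zone.max.y),
            toCcValue(hand.z, zone.min.z, zone.max.z) };
    return true;
}

// Hit Zone: edge-triggered, like a button press.
struct HitZone {
    Box volume;
    bool wasInside = false;

    // Returns true only on the update where the hand moves from outside to inside.
    bool update(const Vec3& hand) {
        const bool inside = volume.contains(hand);
        const bool hit = inside && !wasInside;
        wasInside = inside;
        return hit;
    }
};
```

A slider or XY pad would simply ignore one or two of the three axis values. The edge-triggered check in `HitZone::update` keeps a note from retriggering on every frame while the hand stays inside the zone.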

Layout Authoring

Users can create custom controller layouts using their VR controllers to add, remove, position, group, and edit interface objects. To accommodate the wide range of actions a user can perform on the layout, gesture interaction is used and the virtual handheld controller models show contextual button icons depending on the current interaction state.

To place individual interface objects, the user presses a button on their VR controller. They can select the type of object to add using a pop-up point & click menu. After initial placement, the object can be moved and oriented anywhere with a grab gesture by pressing and holding the VR controller grip button near the object and moving the controller. Similarly, any object can be scaled by using a two-hand pull/push gesture to scale up/down respectively. Multiple objects can be selected by holding down the grip button and moving the controller into the objects. A selection can be grouped by grabbing the selection and pressing the contextual group button that appears on the VR controller.

Inspector

To customize interface object behaviors and aesthetics, the Inspector, a point-and-click menu, is provided. It respects the current object selection, allowing multiple objects to be edited simultaneously. The Inspector is probably my least favorite part about MoveMusic. Although I think it is efficient enough to get the job done, there are more effective ways to present some of these editing controls. For example, I think the way a MIDI Note is set in the Inspector could be improved. Currently, a MIDI Note is set using a number stepper/slider that displays a textual note number/letter value. If I were to expand this feature in the future to allow users to add multiple MIDI notes, management of many MIDI notes could get cumbersome as the user would need to scroll through a list of MIDI note numbers and pick the right ones. Instead, it might be better to present MIDI Notes using a more common musical UI, piano keys. Instead of a point-and-click menu to set the MIDI notes, the user could see a floating 3D piano key roll which would allow them to toggle which notes should be present.
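
For reference, the number-to-letter display mentioned above comes down to a small conversion. The sketch below is an illustration rather than the Inspector's actual code; the function name `midiNoteName` is hypothetical, and it assumes the convention where MIDI note 60 is labeled C4 (some music software labels it C3 instead).

```cpp
#include <array>
#include <cassert>
#include <string>

// Convert a MIDI note number (0..127) to a name such as "C4".
// Assumes note 60 is C4; other conventions shift the octave by one.
inline std::string midiNoteName(int note) {
    assert(note >= 0 && note <= 127);
    static const std::array<const char*, 12> names = {
        "C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B" };
    const int octave = note / 12 - 1;  // note 0 falls in octave -1
    return std::string(names[note % 12]) + std::to_string(octave);
}
```

A piano-style picker would use the same `note % 12` and `note / 12` split in reverse, with the key position giving the pitch class and the keyboard row giving the octave.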

My Story with MoveMusic

The story of MoveMusic's development intertwines with the story of my young adult life. The project is very special to me and has helped teach me that many skills can be learned if you put in the effort and that a task can be started before knowing everything needed to accomplish it. Our ideas are not as out of reach as we may think. Working on the project has been a melding of all my creative passions of design, software engineering, and music.

I prototyped my first MoveMusic idea over Christmas break during my junior year of university. I was majoring in computer science and had developed a passion for coding after my first class covering C++ programming. Back at home with my family for the break, I noticed the PlayStation Move controllers we often used to play our favorite virtual disc golf game. I had always been impressed with the PlayStation Move controllers. In particular, their 3D position tracking was better than anything I had ever seen in a video game motion controller. The PS Move system accomplished 3D position tracking using the PlayStation Eye camera to track the glowing orbs on the tops of the Move controllers.

PlayStation Move hardware. Left: two handheld PlayStation Move motion controllers. Right: PlayStation Eye USB camera. Image © Sony Interactive Entertainment LLC

I started thinking about how these PlayStation Move controllers could be used for music. I'd seen people use Wii controllers for MIDI control by rotating the controller to change various audio parameters. But I thought using the PlayStation Move controllers could offer more interaction possibilities given their 3D position tracking. I did some searching and found the PSMove API by Thomas Perl which would allow me to interface with the PlayStation Move hardware via a C++ API on macOS and Windows. Having recently learned the JUCE C++ GUI/audio app framework for a summer job making music software for a museum, I felt confident I could create a MIDI app utilizing the Move controllers.

During Christmas break I hacked away and was able to create a very basic app that allowed me to play a few drum sounds at different horizontal positions and allowed me to control 3 music parameters based on the 3D position of one of the controllers in view of the camera. Although very basic, I was able to make a performance featuring the prototype during that same Christmas break. I still remember the first moment I waved my arm from left to right to control an audio filter in Ableton and was awestruck that I was able to control sound using my arm movement with such precision and expression! This prototype was the spark of the project.

My first performance using MoveMIDI prototype #1

The prototype very quickly became my passion project and I called it MoveMIDI. Soon I made a logo for the project that I still use today. The logo takes inspiration from the PlayStation Move logo which looks like a letter "A", but I made MoveMIDI's logo look like an "M" and added a sick gradient to each segment to make it look 3D.

MoveMusic Logo

PlayStation Move Logo

(trademarks of Sony Interactive Entertainment LLC)

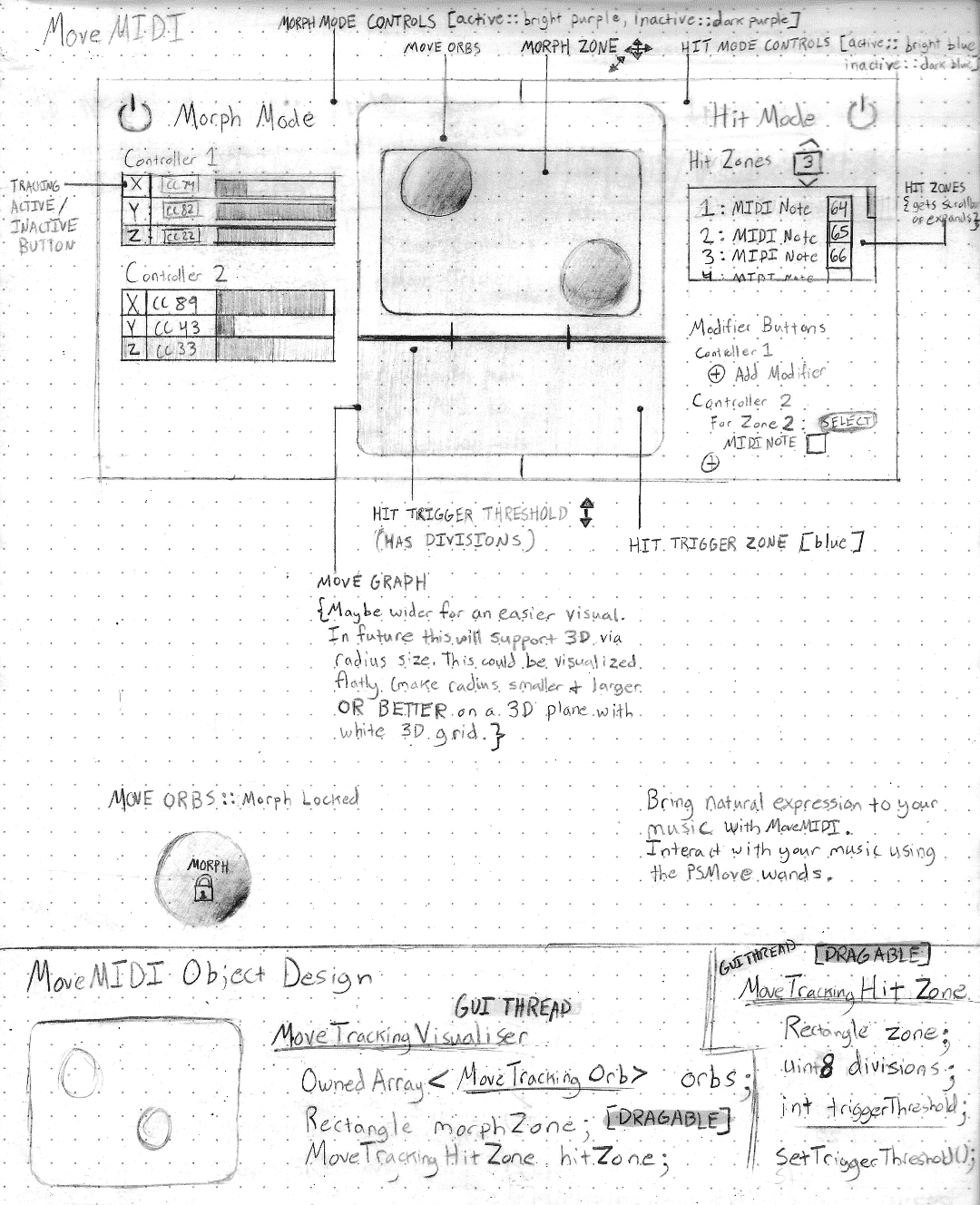

In my free time, I continued designing & coding new ideas for MoveMIDI's interaction and UI. I began with hand-drawn sketches, moved to prototyping UI designs in Sketch design software, and coded UI in C++ and JUCE. I iteratively switched between designing and coding phases. Often, design informed what I would code, but sometimes coding would help me realize a better design idea.

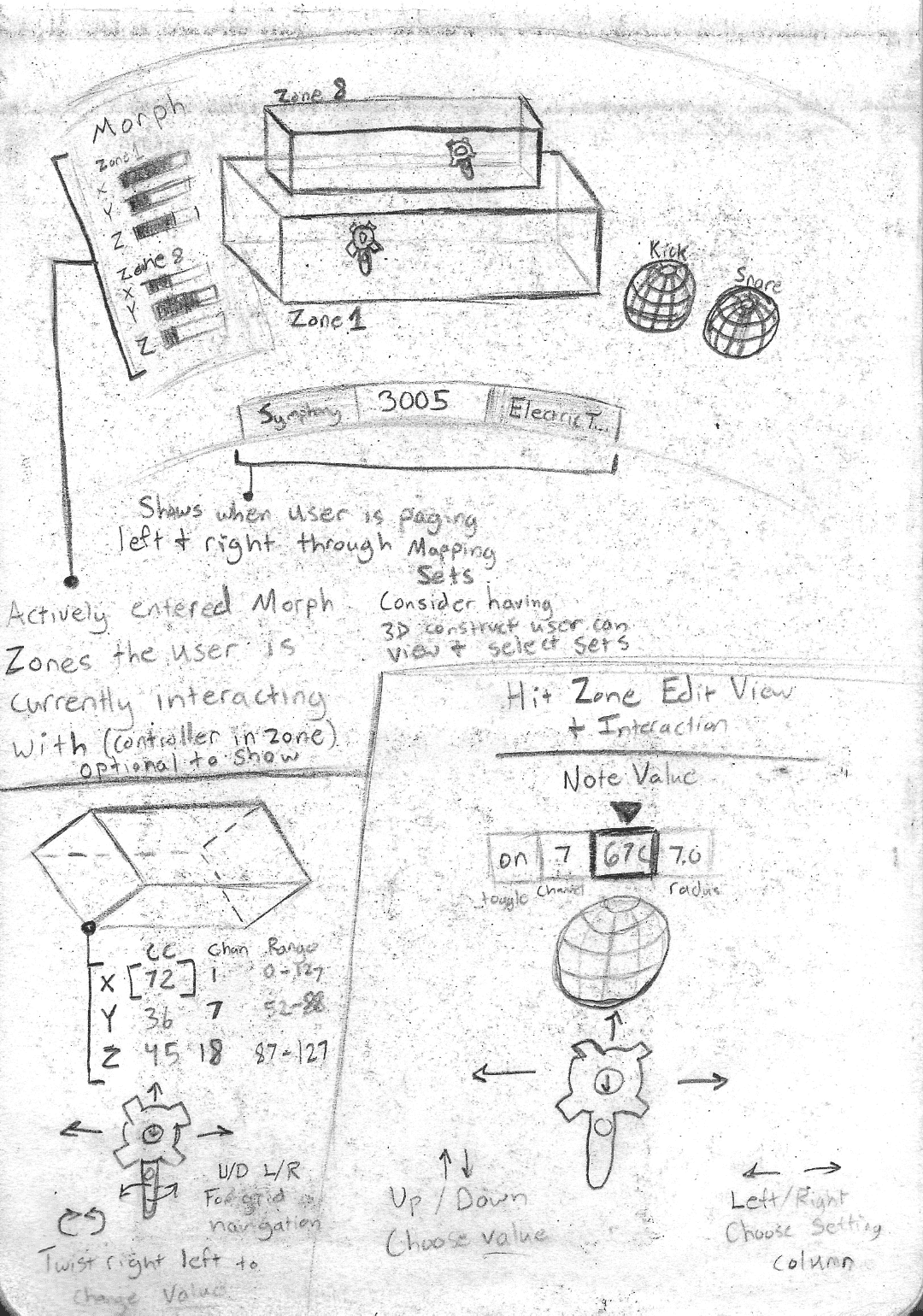

Two sketched UI ideas. First: The first UI sketch I made for MoveMIDI software. Second: A later sketch for MoveMusic's VR interactions and UI.

The second prototype of MoveMIDI came together while taking Dr. Poor's Computer Graphics class my junior year at Baylor University. One of my favorite classes, it taught me the fundamentals of 3D graphics and linear algebra which were crucial to my continued exploration into 3D and later VR. In class we learned the OpenGL 3D graphics API which I then used in MoveMIDI to add a 3D visualization of Hit Zones and 3D controller positions.

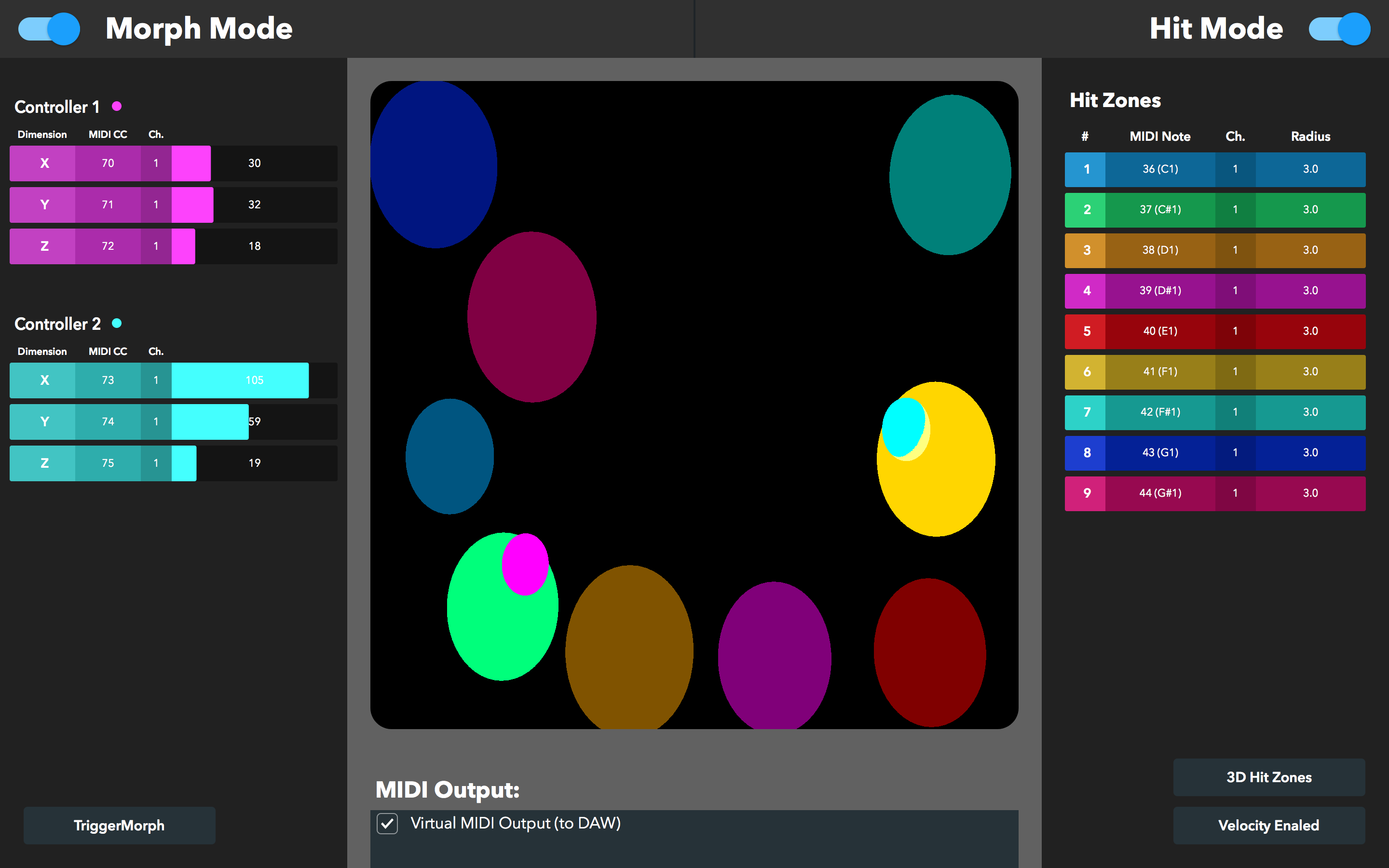

Screenshot of GUI of MoveMIDI prototype #2 made with JUCE and OpenGL in C++.

As I continued working on the project, new opportunities and relationships presented themselves. I met my friend Josh Gledhill in the YouTube comments of that first MoveMIDI performance. Josh researched similar music interaction techniques using Xbox Kinect and worked with a glove-based musical instrument, the Mi-Mu Gloves. From Josh and others I began to learn about similar projects in the music tech and research space. Some of the most inspiring work I came across includes Imogen Heap's Mi-Mu Gloves, Elena Jessop's VAMP glove, the works of Tom Mitchell, and the works of Adam Stark, just to name a few.

Early users of the MoveMIDI prototype.

Sanjay Christo used MoveMIDI with the Artiphon Instrument 1 to bow it like a cello.

Jeff Firman used MoveMIDI to control stage lighting with arm movements.

Eventually, I talked with my professor, Dr. Poor, about his research in human-computer interaction and shared my MoveMIDI project with him. He encouraged me to pursue academic research in human-computer interaction. While completing my undergraduate degree, I wrote a late-breaking work research paper about MoveMIDI and submitted it to the CHI 2019 conference as a poster and interactive presentation, and it was accepted for both entries! I was able to attend the conference in Scotland. To help pay for the trip, I applied to be a student worker, which gave me the opportunity to meet one of my best friends, Dave Dey, who researches human interaction with self-driving cars. The conference was inspiring and it was a beautiful opportunity to meet people innovating to improve the daily lives of others.

These experiences led me to pursue a master's degree at Baylor while researching human-computer interaction with Dr. Poor. In our research we performed a user study evaluating users' rhythmic accuracy when performing rhythms with MoveMIDI. I also had the opportunity to work on a 3rd MoveMIDI prototype for the Oculus Quest VR system. This prototype was the closest to the current-day MoveMusic system. Using the native Oculus SDK, I wrote C++ code to create a VR prototype where you could place any number of spherical hit zones in any 3D position around you and hit them to trigger MIDI notes. I was finally freed from needing to face a camera in one direction and could interact fully in a 3D space, above my head, near my feet, etc.! Additionally, I made a basic JUCE app with C++ to receive OSC messages from the VR headset via Wi-Fi and translate them to MIDI messages to control music software. The VR visuals were horrible and the interactions simple, but this prototype proved to me it was possible to have low-latency MIDI control from a wireless VR headset!
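
As a rough illustration of that OSC-to-MIDI translation step (not the prototype's actual code), the sketch below decodes one OSC message and builds a 3-byte MIDI Note On message from it. The `/note` address, the three-integer argument layout (channel, note, velocity), and all function names are assumptions invented for this example. Only the OSC 1.0 encoding rules (null-terminated strings padded to 4 bytes, big-endian 32-bit integers) and the MIDI Note On status byte `0x90` come from the respective specifications.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <optional>
#include <string>
#include <vector>

using Bytes = std::vector<std::uint8_t>;

// Read a null-terminated OSC string starting at `pos` and advance `pos`
// past its padding (OSC pads strings to a multiple of 4 bytes).
inline std::optional<std::string> readOscString(const Bytes& buf, std::size_t& pos) {
    assert(pos % 4 == 0);  // OSC fields start on 4-byte boundaries
    std::size_t end = pos;
    while (end < buf.size() && buf[end] != 0) ++end;
    if (end >= buf.size()) return std::nullopt;  // no terminator found
    std::string s(reinterpret_cast<const char*>(buf.data() + pos), end - pos);
    pos = (end + 4) & ~std::size_t{3};  // skip terminator and padding
    return s;
}

// Read one big-endian 32-bit integer argument.
inline std::optional<std::int32_t> readOscInt32(const Bytes& buf, std::size_t& pos) {
    if (pos + 4 > buf.size()) return std::nullopt;
    const std::uint32_t v = (std::uint32_t{buf[pos]} << 24) |
                            (std::uint32_t{buf[pos + 1]} << 16) |
                            (std::uint32_t{buf[pos + 2]} << 8) |
                             std::uint32_t{buf[pos + 3]};
    pos += 4;
    return static_cast<std::int32_t>(v);
}

// Translate a hypothetical "/note" message carrying (channel 1..16, note, velocity)
// into a MIDI Note On message. Returns nullopt for anything else.
inline std::optional<std::array<std::uint8_t, 3>> oscToNoteOn(const Bytes& packet) {
    std::size_t pos = 0;
    const auto address = readOscString(packet, pos);
    if (!address || *address != "/note") return std::nullopt;
    const auto tags = readOscString(packet, pos);
    if (!tags || *tags != ",iii") return std::nullopt;

    const auto channel = readOscInt32(packet, pos);
    const auto note = readOscInt32(packet, pos);
    const auto velocity = readOscInt32(packet, pos);
    if (!channel || !note || !velocity) return std::nullopt;
    if (*channel < 1 || *channel > 16) return std::nullopt;
    if (*note < 0 || *note > 127 || *velocity < 0 || *velocity > 127) return std::nullopt;

    return std::array<std::uint8_t, 3>{
        static_cast<std::uint8_t>(0x90 | (*channel - 1)),  // Note On + channel
        static_cast<std::uint8_t>(*note),
        static_cast<std::uint8_t>(*velocity) };
}
```

In a real bridge app the packet would arrive from a UDP socket and the resulting bytes would be handed to a MIDI output; both ends are left out here to keep the translation step in focus.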

Videos of the 2nd MoveMIDI prototype.

While in graduate school, MoveMIDI continued to present me with new opportunities. In 2018 I was selected to participate in the Red Bull Hack the Hits hackathon in Chicago where students with varying backgrounds in music & technology worked together to create new musical instruments. Our team created a 3-tiered DJ turntable! Later that year, my MoveMIDI software won the 2018 JUCE Award which provided me with a free ticket to attend the 2019 Audio Developer Conference (ADC) in London. At ADC'19 I presented MoveMIDI as an interactive demo and presented a poster about gestural interaction for music with Balandino Di Donato, who also researches music tech including gestural controllers. I also got to meet some of my music tech heroes, Tom Mitchell and Adam Stark, who were presenting the Mi-Mu Gloves as an interactive demo at the conference! I even got the opportunity to pitch my MoveMIDI business as one of 10 companies invited to the ADC'19 pitch event. But even better than all that, I got to propose to McKenna, my now wife, who joined me for the trip to London! I proposed in the beautiful Kew Gardens at the end of fall. What an amazing trip!

After graduating, I began working part-time for Future Audio Workshop making SubLabXL while using my extra time to turn MoveMIDI into an app that I could sell to consumers. I renamed the project to MoveMusic and began developing with the intention of making a VR app to sell on the Meta Quest VR store. After almost two years of work I have the app ready to sell and am waiting on review from the Meta store team to publish the app.

The Future

I am excited to finally release MoveMusic VR after all of these years of exploration and experimentation, but I am even more excited to improve the product as I look forward to the future. With the continued development of AR headsets and full-body tracking, I hope to one day build a system that allows people to create music by dancing. Today, my MoveMusic product feels like the first step in my attempt to realize that vision. I am excited to see what we will create in the future. Thank you for reading my story :)

MoveMIDI Video Archive

To provide more reference material of my past work on the project, I've created a YouTube playlist of old MoveMIDI-related videos.