Playful Instruments

Music software instruments for a children’s museum exhibit

- JUCE

- C++

- UI/UX

In 2016, I created two musical software instruments for a children's exhibit at the Mayborn Museum at Baylor University. The first app was integrated into a giant piano that you play with your feet, and it let the user pick between four sounds. The second app ran on a large touch screen, where people could drag a finger across the screen to play a pure-tone pitch. Both apps showed visualizations of the audio, including an oscilloscope, a scrolling audio waveform, and a frequency spectrum. I built these cross-platform apps using JUCE and C++. The implementation involved sampling and synthesis techniques, multi-threading, and lock-free programming. I designed and coded the UI with regular client feedback. This was my first experience using the JUCE audio app framework.

My grandparents and me at the Giant Piano exhibit with my software.

Taking on the Project

The Mayborn Museum sent an email to all the computer science students at Baylor asking whether anybody was interested in a job coding a pair of audio instrument applications for an exhibit. I had no prior experience in audio software development, but I had a passion for both audio/music and coding, so I applied anyway. A family member soon tried to talk me out of it, telling me not to waste my time (and possibly money) on a project that I had no prior experience to back up. But I was excited to learn about the topic and confident I could figure it out, so I took on the project anyway. This decision greatly shaped the course of my career, and I am very glad I made it.

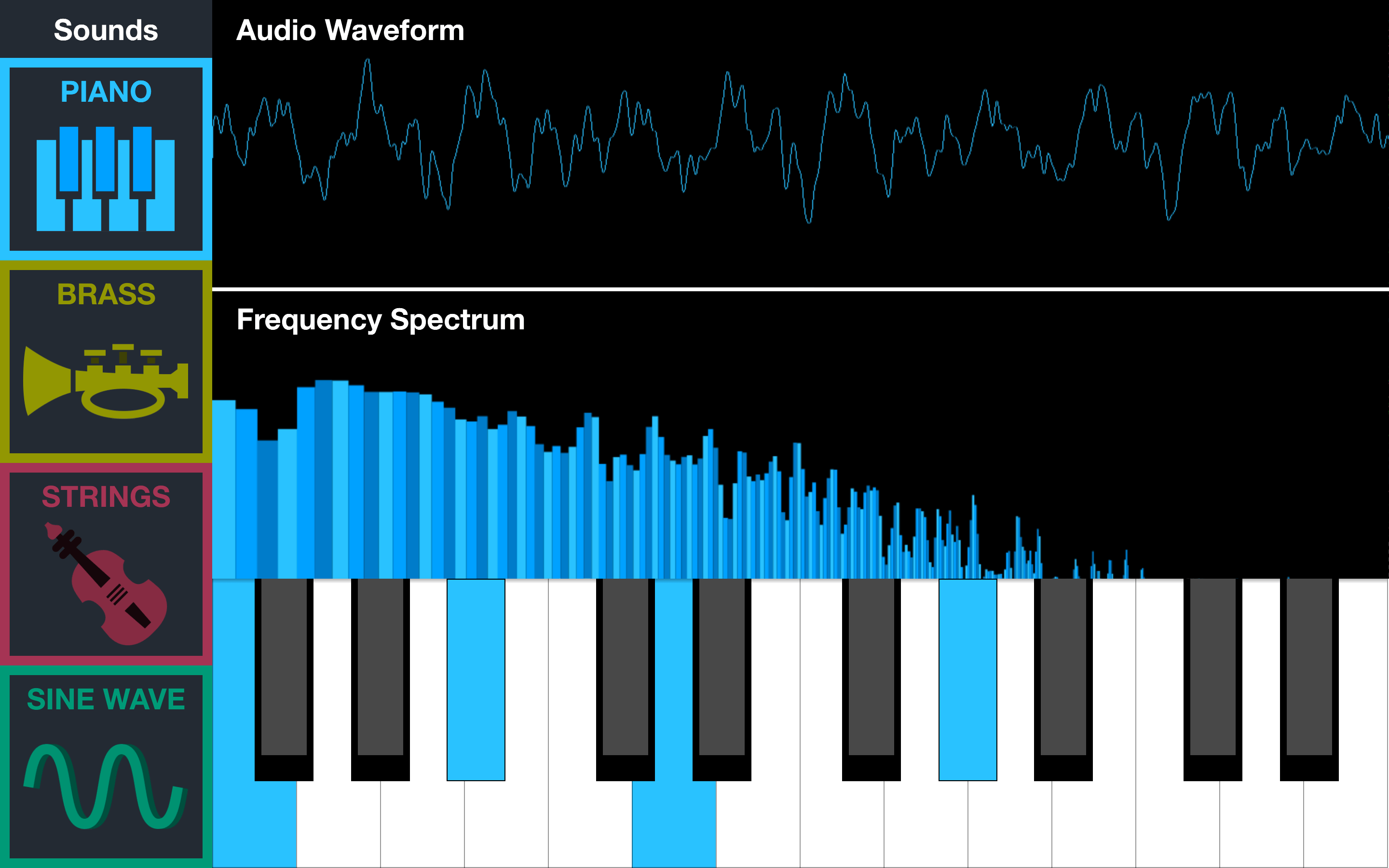

App 1: Giant Piano

After I was hired, the first application I was assigned was a multi-voice sampler instrument that plays four different tones (piano, brass, strings, and sine synth) and is triggered by keyboard input. The application also shows two visualizations of the generated audio on screen (oscilloscope and frequency spectrum displays). For the final exhibit, my program was connected to a giant piano keyboard that users play with their feet to trigger the notes and sounds. This aspect obviously made the project about ten times cooler.
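To give a feel for the lock-free side of an app like this, here is a minimal sketch of one common pattern: a single-producer, single-consumer FIFO that lets an audio thread hand samples to a UI thread for an oscilloscope display without taking a lock. This is an illustration in plain C++, not the exhibit's actual code. The class name `SampleFifo` and its methods are hypothetical, and the assumption that the visualizers were fed this way is mine.

```cpp
#include <atomic>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical single-producer/single-consumer FIFO.
// Assumption: one audio thread calls push(), one UI thread calls pop().
class SampleFifo
{
public:
    // Capacity must be a power of two so indices can wrap with a bit mask.
    explicit SampleFifo(std::size_t capacityPowerOfTwo)
        : buffer(capacityPowerOfTwo), mask(capacityPowerOfTwo - 1)
    {
        assert(capacityPowerOfTwo > 0 && (capacityPowerOfTwo & mask) == 0);
    }

    // Audio thread only. Returns false (dropping the sample) when full,
    // so the audio callback never blocks.
    bool push(float sample)
    {
        const std::size_t w = writeIndex.load(std::memory_order_relaxed);
        const std::size_t r = readIndex.load(std::memory_order_acquire);
        if (w - r == buffer.size())
            return false;
        buffer[w & mask] = sample;
        // Release makes the stored sample visible before the new index.
        writeIndex.store(w + 1, std::memory_order_release);
        return true;
    }

    // UI thread only. Returns false when there is nothing to read.
    bool pop(float& sample)
    {
        const std::size_t r = readIndex.load(std::memory_order_relaxed);
        const std::size_t w = writeIndex.load(std::memory_order_acquire);
        if (r == w)
            return false;
        sample = buffer[r & mask];
        readIndex.store(r + 1, std::memory_order_release);
        return true;
    }

private:
    std::vector<float> buffer;   // allocated once, up front
    std::size_t mask;
    std::atomic<std::size_t> writeIndex{0};
    std::atomic<std::size_t> readIndex{0};
};
```

In this sketch the audio callback would call `push` for each generated sample, and the UI's repaint timer would call `pop` in a loop to collect whatever arrived since the last frame. Dropping samples when the buffer is full is acceptable for a visualizer, since the display only needs a recent snapshot of the signal.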

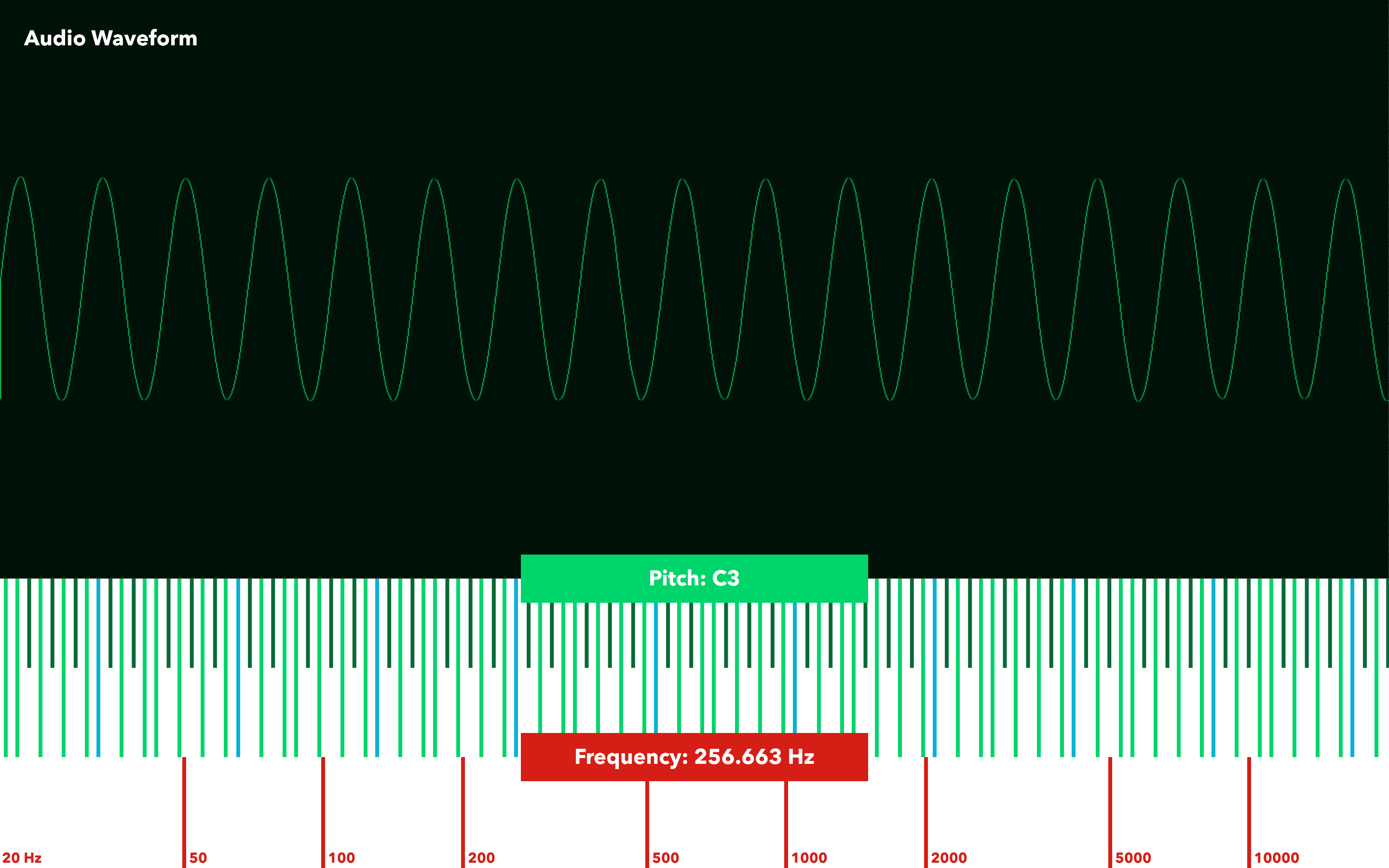

App 2: Measuring Sound

The second instrument application I created for the exhibit is meant to teach children about the basic relationship between the pitch and frequency of a sound. Played on a touch screen, the program generates a pure tone (a synthesized sine wave) that rises in pitch as the user's finger moves from left to right across the screen. The application displays this tone on an oscilloscope to show how the waveform changes with pitch. A graph across the bottom shows the relationship between pitch and frequency as the user moves a finger around the touchscreen. Finally, a microphone input lets users see how their voice affects the generated tone in the oscilloscope visualizer.
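The touch-to-tone idea can be sketched in a few lines of plain C++. The example below maps a normalized horizontal position to a frequency and drives a phase-accumulating sine oscillator. The names `positionToFrequency` and `SineOscillator`, the 110 Hz to 1760 Hz range, and the exponential mapping are all assumptions for illustration; the actual range and curve used in the exhibit are not stated here.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical pitch range (four octaves); not the exhibit's real limits.
constexpr double kMinHz = 110.0;
constexpr double kMaxHz = 1760.0;
constexpr double kTwoPi = 6.283185307179586;

// Maps a normalized horizontal position (0 = left edge, 1 = right edge)
// to a frequency. Assumption: an exponential curve, so equal finger
// distances correspond to equal musical intervals.
double positionToFrequency(double x)
{
    x = std::fmin(std::fmax(x, 0.0), 1.0);   // clamp to the screen
    return kMinHz * std::pow(kMaxHz / kMinHz, x);
}

// Minimal sine oscillator that advances a phase angle once per sample.
class SineOscillator
{
public:
    explicit SineOscillator(double sampleRateHz) : sampleRate(sampleRateHz)
    {
        assert(sampleRateHz > 0.0);
    }

    void setFrequency(double hz)
    {
        phaseIncrement = kTwoPi * hz / sampleRate;
    }

    float nextSample()
    {
        const float out = static_cast<float>(std::sin(phase));
        phase += phaseIncrement;
        if (phase >= kTwoPi)
            phase -= kTwoPi;                 // keep the phase bounded
        return out;
    }

private:
    double sampleRate;
    double phase = 0.0;
    double phaseIncrement = 0.0;
};
```

With these assumed limits, the middle of the screen lands on 440 Hz. Because the oscillator keeps its phase when the frequency changes, dragging a finger glides the pitch smoothly instead of clicking at each update.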